Safe Development, Assurance, and Operation of AI-based Functions for Connected Automated Mobility

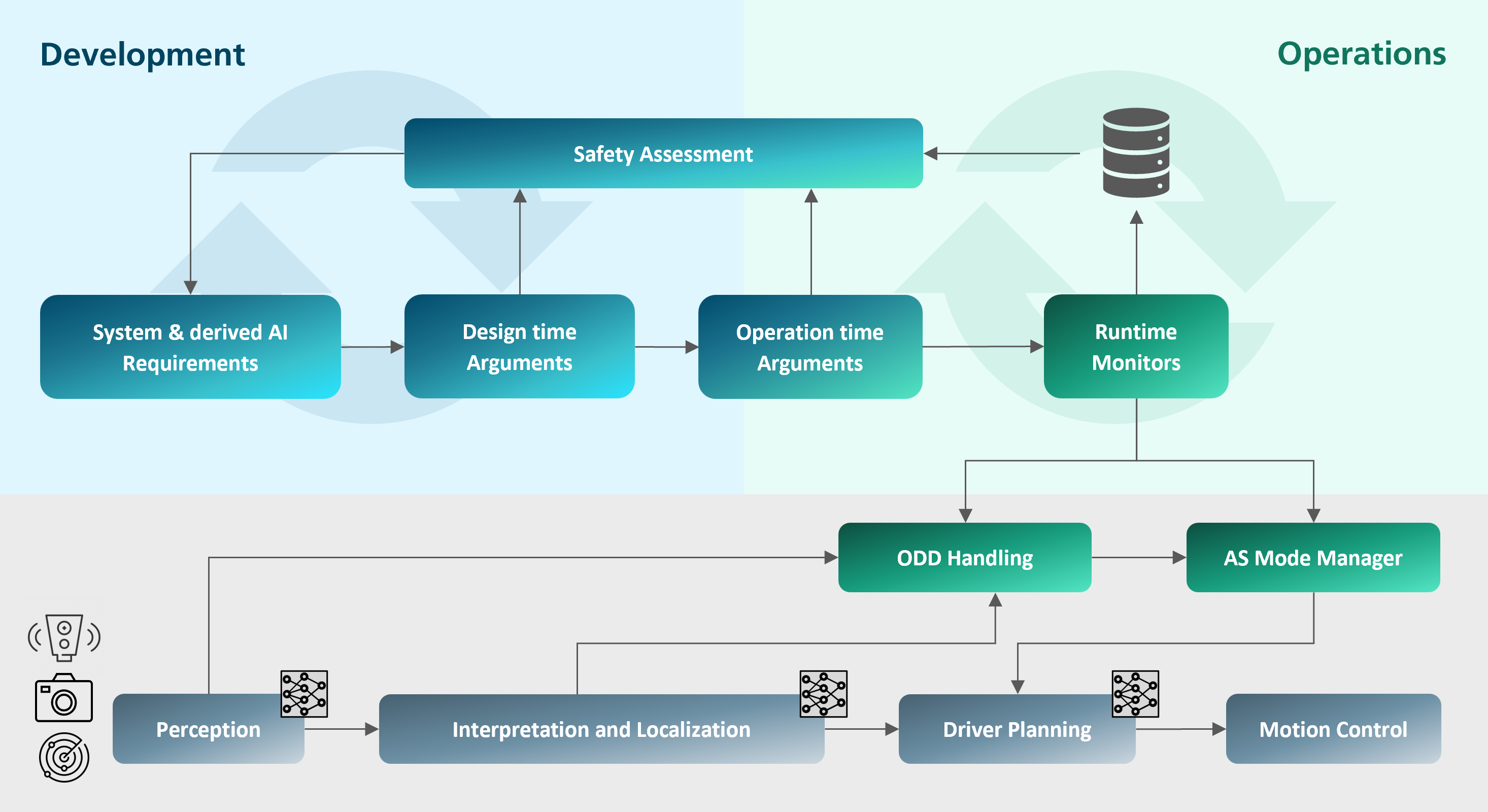

Continuous Safety Assurance is a comprehensive approach for bringing AI-based driving functions into operation safely and quickly. Starting from clearly defined safety goals, an acceptable residual risk, and a precise specification of the operational design domain (ODD), we help you create guidelines for architecture, metrics, and efficient verification, supported by safe LLMs and agents.

Building on this, structured, evidence-based safety cases with confidence assessment and standards-compliant arguments (ISO/PAS 8800, SOTIF per ISO 21448, EU AI Act) provide the foundation for approval. For operation, we help you set up monitoring based on safety performance indicators (SPIs), governance mechanisms, and triggers for safe updates, and feed runtime evidence back into your safety argumentation. Rapid prototyping accelerates integration and validation in standardized environments.

This closes the loop between development, operation, and learning: risks remain under control, compliance is demonstrable, and your solution improves iteratively with measurable safety.

Application areas range from small neural networks on microcontrollers (embedded AI), e.g., virtual sensors in engine control, to complex AI-based functions in the vehicle or infrastructure for perception, trajectory planning, or end-to-end AI architectures spanning everything from object detection to decision-making.

We offer support in the following areas:

- Development of safety-critical systems: safety goals, residual risk, and ODD; metrics; safe ML architectures and monitors; efficient V&V; LLMs and agents.

- Continuous safety assurance in operation: Assumption and SPI monitoring, early mitigation, root cause analysis, safe OTA updates.

- Evidence-based safety argumentation: structured safety cases, confidence assessment, compliance; dynamic safety cases with runtime evidence and re-argumentation.

- Rapid prototyping: Development of new AI functions or V&V of your AI functions in a standardized architecture using our APIKS framework.

Fraunhofer Institute for Cognitive Systems IKS
